-

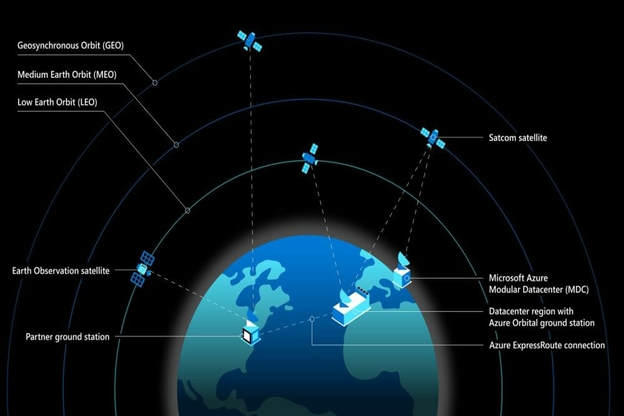

Azure Space in its Entirety

The space community is expanding quickly, and innovation is lowering the barriers to entry for both public and private-sector organisations. Microsoft’s goal is to make space networking and computing more accessible to businesses, especially in industries such as agriculture, energy, telecommunications, and government. To that end, Microsoft is working with SpaceX to provide satellite-powered Internet …

-

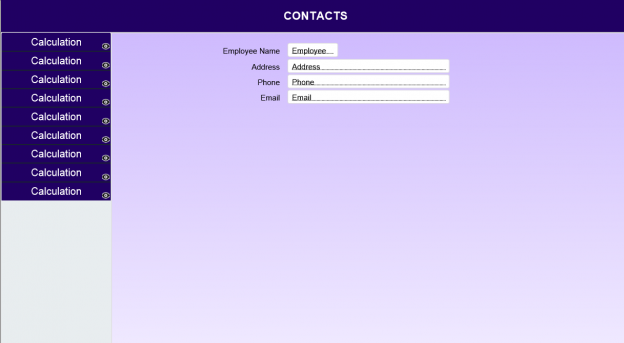

Guide to Avoid Navigation Rework in FileMaker

Have you ever faced the hassle of adding a new module to every layout after the standard menu navigation was already built? We all know how bothersome that can be! Here is an easy solution; read along… Undoubtedly, navigation is an integral part of every application. It plays a pivotal role when a user …

-

A Developer’s Tale: Using Microsoft technologies to integrate Myzone device with Genavix application

It is no secret that digital technology is slowly shaping the future of the healthcare industry. The use of fitness tracking devices has grown rapidly, the philosophy being ‘that which gets measured can be improved’. The MetaSys team has worked on integrating tracking devices with fitness and nutrition applications. This article describes one such achievement, …

-

Barcode Scanning for a web-based application

In this article, I will share some information about a recent barcode scanning implementation we did for a web-based application for one of our clients. Barcodes are nothing more than a machine-readable form of data represented as lines. Nowadays, barcodes are an essential part of inventory management for a number …

-

Device and Browser Testing Strategies

Testing without proper planning can cause major problems for an app release, as it can result in compromised software quality and an increase in total cost. Defining and following a suitable and thorough testing procedure is a very important part of the development process that should be considered from the very beginning. Time should be …

-

Web API security using JSON web tokens

Today, data security during financial transactions is critical. The protection of sensitive user data should be a major priority for developers working on applications that use clients’ financial or personal information. These days, many apps are accessed through multiple devices including desktops, laptops, mobile phones and tablets. Both web apps, …

-

Improve Performance of Web Applications

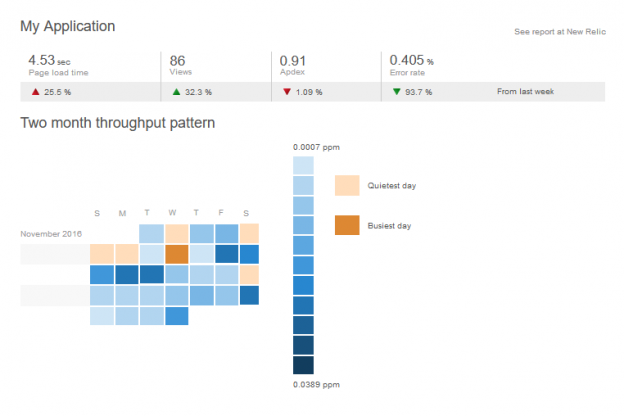

We all know how frustrating it is to watch the progress spinner going on and on while navigating through a web app. Performance issues like these cause users to lose interest in a web application, which can hinder the success of the app. Improving performance is an important task for any app developer, …

-

How MetaSys handled performance issues related to Entity Framework

In building web applications for clients, two important factors we at MetaSys focus on are performance and speed of development. Good performance is crucial for the success of any web application, as users expect pages and screens to load instantly. Users will quickly stop using slow programs in favour of other web or mobile applications. …


-

How I cracked my MCSA Web Applications certification Exam

It has been a couple of months since I earned my MCSA certification in Web Applications, and in this article, I will share my experience of preparing for and taking the exams. If you’re interested in getting MCSA certification, this article might give you an idea of how long you might need to prepare, and …


-

InBody Integration for biometric and blood pressure data into a web application

People today are more health-conscious than ever before, and digital technology is playing an important role in this development. Thanks to modern technology, there are many tools and devices to measure and record physical characteristics that relate to personal health. Tracking exercise routines and nutrition has become a popular way for individuals to keep up …


-

React Native vs Native apps development

React Native has some exciting features that make it popular in the developer community. Many popular apps such as Instagram, Facebook Ads Manager, Walmart, SoundCloud and Netflix are based on React Native. We have worked with both native app development and React Native, and in this article we share our experience and give some examples. …