-

Best Practices for Performance Optimization in .NET Applications

At MetaSys, we had the opportunity to transition a legacy application, initially constructed with the .NET Framework 1.0, to the more modern .NET Core. We put into practice some of the relevant strategies detailed in this blog post. In a landscape where both the application and the business are in a state of evolution, the …

-

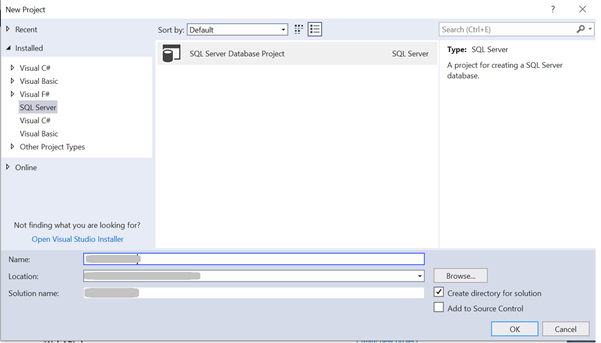

How to use a Database project in .NET Visual Studio?

Introduction: The database plays a vital role in any web or Windows application development. A Database project can be used to maintain a repository of schema changes. Why do we need a Database project? While developing any application, the developer has to work on many database tasks such as creating tables, triggers, stored procedures, …

-

Device and Browser Testing Strategies

Testing without proper planning can cause major problems for an app release, as it can result in compromised software quality and an increase in total cost. Defining and following a suitable and thorough testing procedure is a very important part of the development process that should be considered from the very beginning. Time should be …

-

How MetaSys handled performance issues related to Entity Framework

In building web applications for clients, two important factors we at MetaSys focus on are performance and speed of development. Good performance is crucial for the success of any web application, as users expect pages and screens to load instantly. Users will quickly stop using slow programs in favour of other web or mobile applications. …

-

InBody Integration for biometric and blood pressure data into a web application

People today are more health-conscious than ever before, and digital technology is playing an important role in this development. Thanks to modern technology, there are many tools and devices to measure and record physical characteristics that relate to personal health. Tracking exercise routines and nutrition has become a popular tool for individuals to keep up …

-

Using the NReco PDF writing tool

These days, financial, marketing and e-commerce websites allow us to download reports and receipts in PDF form. The PDF file format is a convenient way of sharing information, as there is a high level of confidence that the user can open the document with the intended look and feel. This is even true for documents …