-

What is EWS?

Exchange Web Services is an application programming interface (API) by Microsoft that allows programmers to fetch Microsoft Exchange items, including calendars, contacts and emails. It can be used to read a mailbox and retrieve emails along with their headers, body, attachments and other metadata. This is useful when the same information needs …

-

Improve Performance of Web Applications

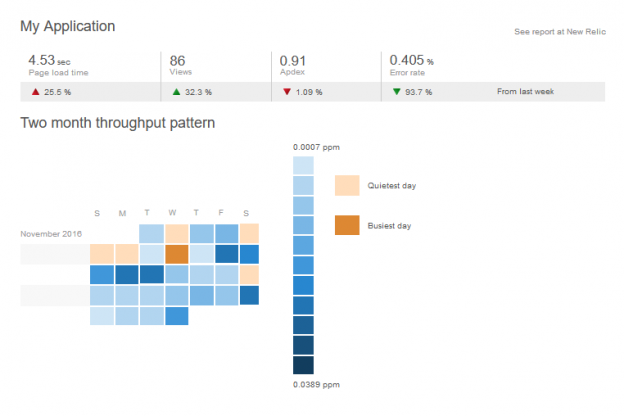

We all know how frustrating it is to watch the progress wheel spin on and on while navigating through a web app. Performance issues like these cause users to lose interest in a web application, which can hinder its success. Improving performance is an important task for any app developer, …

-

How MetaSys handled performance issues related to Entity Framework

In building web applications for clients, two important factors we at MetaSys focus on are performance and speed of development. Good performance is crucial for the success of any web application, as users expect pages and screens to load instantly. Users will quickly abandon slow programs in favour of other web or mobile applications. …


-

InBody Integration for biometric and blood pressure data into a web application

People today are more health-conscious than ever before, and digital technology is playing an important role in this development. Thanks to modern technology, there are many tools and devices to measure and record physical characteristics that relate to personal health. Tracking exercise routines and nutrition has become a popular way for individuals to keep up …


-

Using the NReco PDF writing tool

These days, financial, marketing and e-commerce websites allow us to download reports and receipts as PDFs. The PDF file format is a convenient way of sharing information, as there is a high level of confidence that the user can open the document with the intended look and feel. This holds even for documents …

-

A Case Study – Building a Dashboard using Google Charts in ASP.NET

Tracking KPIs, metrics and any other relevant data is important for any business looking to improve its performance, and proper visualisation can be helpful for identifying trends and patterns. A useful information management tool is a dashboard, which can be used to provide a graphical summary of all relevant information. This article details a recent …


-

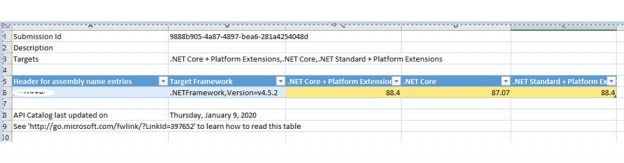

Converting an MVC Web App to a .NET Core Web App

History: Like many others, we have been working on MVC 5 based web applications since 2013. With Microsoft planning significant investment in its open-source development platform, .NET Core, we saw the advantage of migrating our current applications to the new platform sooner rather than later. The first version, .NET Core 1.0, was released by …