-

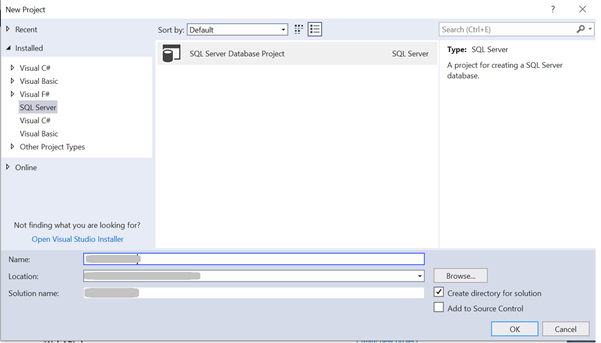

How to use a Database project in .NET Visual Studio?

Introduction: A database plays a vital role in any web or Windows application development. A Database project can be used to maintain a repository of schema changes. Why do we need a Database project? While developing any application, the developer has to work on many database tasks such as creating tables, triggers, stored procedures, …

-

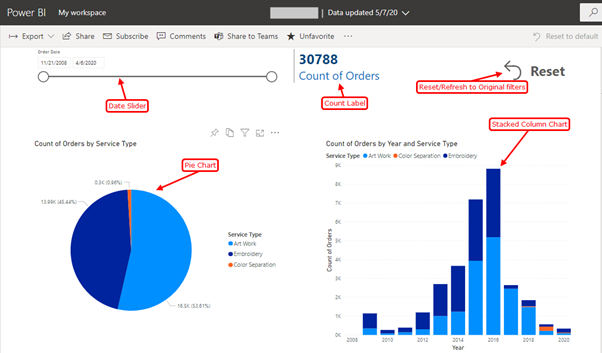

Power BI – A Visualization Tool which is easy to understand and develop

Power BI is a tool for generating business intelligence reports, charts, and graphs that incorporate easy-to-understand visuals. It is a self-service BI tool that is particularly useful for data analysts who create and distribute BI reports throughout the organization. With moderate knowledge of SQL, one can develop simple Power BI visuals after …

-

InBody Integration for biometric and blood pressure data into a web application

People today are more health-conscious than ever before, and digital technology is playing an important role in this development. Thanks to modern technology, there are many tools and devices to measure and record physical characteristics that relate to personal health. Tracking exercise routines and nutrition has become a popular way for individuals to keep up …

-

SQL Server on Linux!? – Meet SQL Server 2017

Over the last decade, data has become the new oil. While increasing digitization has led to data growing exponentially, several other factors have also contributed. The cost of data storage has dropped substantially, and enterprises are unwilling to delete any of their data. Data is now a corporate asset that is archived, not deleted. In …