-

Implementing Active Directory in a .NET application with Multiple Roles

The need for a directory of users arises when various devices are used on the same network. It is crucial to keep this directory in one central source, known as Active Directory. Active Directory validates and authenticates users accessing resources across the domain with a single sign-on. In this blog, we will demonstrate …


-

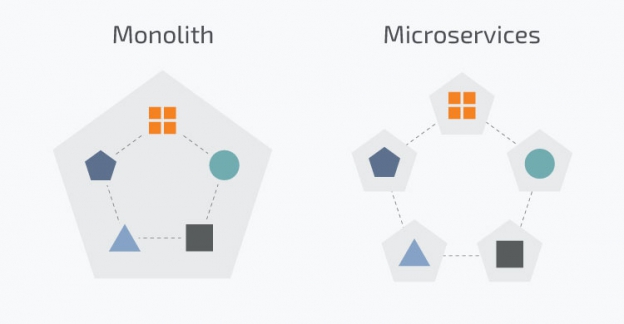

What, Why, and How of Microservices?

What are microservices? Historically, applications were monolithic: the architecture was a single, tightly coupled unit. Microservices, by contrast, are small, independent business modules. Each microservice performs its own distinct business function, sometimes with a dedicated database. As shown in the above image, the architecture of Microservices consists …

-

Device and Browser Testing Strategies

Testing without proper planning can cause major problems for an app release, compromising software quality and increasing total cost. Defining and following a thorough testing procedure is an important part of the development process and should be considered from the very beginning. Time should be …

-

What is EWS?

Exchange Web Services (EWS) is an application programming interface (API) from Microsoft that allows programmers to fetch Microsoft Exchange items, including calendars, contacts, and emails. It can be used to read a mailbox and retrieve emails along with their metadata, such as headers, as well as the body and attachments. This is useful when the same information needs …

-

Using the NReco PDF writing tool

These days, financial, marketing, and e-commerce websites let us download reports and receipts as PDF files. The PDF format is a convenient way to share information, because we can be highly confident that the user will be able to open the document with its intended look and feel. This is even true for documents …

-

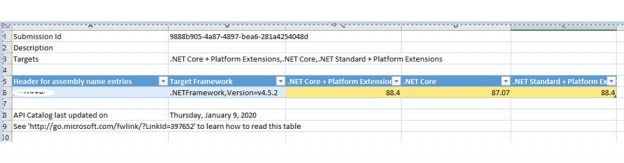

Converting an MVC Web App to a .NET Core Web App

History: Like many others, we have been working on MVC 5-based web applications since 2013. With Microsoft planning significant investment in its open-source development platform, .NET Core, we saw the advantage of migrating our current applications to the new platform sooner rather than later. The first version, .NET Core 1.0, was released by …